- 152

- 3 014 024

Ox educ

Приєднався 3 чер 2014

A channel committed to producing the highest quality free academic content for a worldwide audience. All content is produced on a not-for-profit basis by students at Oxford University. Visit www.ox-educ.com for more information.

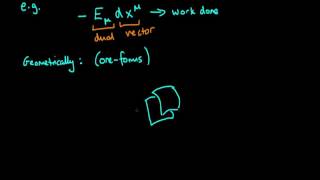

An introduction to vectors and dual vectors

Provides an introduction (with examples) of vectors and dual vectors, and discusses how their components transform under changes of coordinates. For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

Переглядів: 30 792

Відео

What are dual vectors?

Переглядів 19 тис.6 років тому

Provides an overview of dual vectors and explains how they behave. In doing so, we shall explain how to visualise dual vectors. For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

How to visualise a one-form

Переглядів 21 тис.7 років тому

Provides insight into how to visualise one-forms motivated through examples. For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

Cauchy Schwarz Inequality Proof part 2

Переглядів 3,1 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

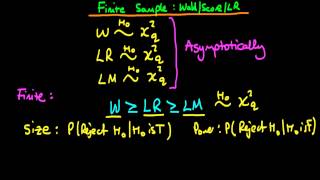

Finite sample properties of Wald + Score and Likelihood Ratio test statistics

Переглядів 2,3 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

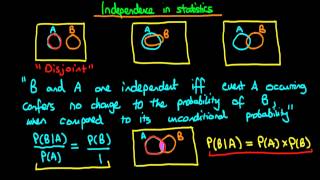

Independence in statistics an introduction

Переглядів 6 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

Cauchy Schwarz Inequality Proof

Переглядів 17 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

76 method of moments log normal distribution

Переглядів 12 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

78 method of moments linear regression

Переглядів 14 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

The intuition behind Jensen's Inequality

Переглядів 49 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

Zero conditional mean of errors

Переглядів 4,3 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

75 method of moments normal distribution

Переглядів 17 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

Jensen's Inequality proof

Переглядів 61 тис.9 років тому

For more information on econometrics and Bayesian statistics, see: ben-lambert.com/

43 - Prior predictive distribution (a negative binomial) for gamma prior to poisson likelihood 2

Переглядів 8 тис.9 років тому

This video provides another derivation (using Bayes' rule) of the prior predictive distribution - a negative binomial - for when there is a Gamma prior to a Poisson likelihood. If you are interested in seeing more of the material on Bayesian statistics, arranged into a playlist, please visit: ua-cam.com/play/PLFDbGp5YzjqXQ4oE4w9GVWdiokWB9gEpm.html Unfortunately, Ox Educ is no more. Don't fret h...

44 - Posterior predictive distribution a negative binomial for gamma prior to poisson likelihood

Переглядів 13 тис.9 років тому

This video provides a derivation of the posterior predictive distribution - a negative binomial - for when there is a gamma prior to a Poisson likelihood. If you are interested in seeing more of the material on Bayesian statistics, arranged into a playlist, please visit: ua-cam.com/play/PLFDbGp5YzjqXQ4oE4w9GVWdiokWB9gEpm.html Unfortunately, Ox Educ is no more. Don't fret however as a whole load...

40 - Poisson model: crime count example introduction

Переглядів 10 тис.9 років тому

40 - Poisson model: crime count example introduction

41 - Proof: Gamma prior is conjugate to Poisson likelihood

Переглядів 33 тис.9 років тому

41 - Proof: Gamma prior is conjugate to Poisson likelihood

42 - Prior predictive distribution for Gamma prior to Poisson likelihood

Переглядів 14 тис.9 років тому

42 - Prior predictive distribution for Gamma prior to Poisson likelihood

37 - The Poisson distribution - an introduction - 1

Переглядів 8 тис.9 років тому

37 - The Poisson distribution - an introduction - 1

39 - The gamma distribution - an introduction

Переглядів 229 тис.9 років тому

39 - The gamma distribution - an introduction

38 - The Poisson distribution - an introduction - 2

Переглядів 9 тис.9 років тому

38 - The Poisson distribution - an introduction - 2

36 - Population mean test score - normal prior and likelihood

Переглядів 6 тис.9 років тому

36 - Population mean test score - normal prior and likelihood

33 - Normal prior conjugate to normal likelihood - intuition

Переглядів 11 тис.9 років тому

33 - Normal prior conjugate to normal likelihood - intuition

35 - Normal prior and likelihood - posterior predictive distribution

Переглядів 18 тис.9 років тому

35 - Normal prior and likelihood - posterior predictive distribution

34 - Normal prior and likelihood - prior predictive distribution

Переглядів 11 тис.9 років тому

34 - Normal prior and likelihood - prior predictive distribution

32 - Normal prior conjugate to normal likelihood - proof 2

Переглядів 20 тис.9 років тому

32 - Normal prior conjugate to normal likelihood - proof 2

31 - Normal prior conjugate to normal likelihood - proof 1

Переглядів 30 тис.9 років тому

31 - Normal prior conjugate to normal likelihood - proof 1

30 - Normal prior and likelihood - known variance

Переглядів 16 тис.9 років тому

30 - Normal prior and likelihood - known variance

Method of Moments and Generalised Method of Moments Estimation part 2

Переглядів 75 тис.9 років тому

Method of Moments and Generalised Method of Moments Estimation part 2

13 exchangeability what is its significance?

Переглядів 15 тис.9 років тому

13 exchangeability what is its significance?

where does the values of 0.25 and 0.4 comes from?

This is a triumph of excellent teaching. Thank you.

Cool video! I finaly understood it! There are not so much about this theme in the web... thank you!

I'd like to mention that we can go beyond the Monty Hall problem and touche on a fundamental issue in probability and statistics: the comparability of events with different sample spaces or magnitudes. 1. A first event with a magnitude of 3 (three doors) 2. A second event with a magnitude of 2 (two doors) While a great introduction into probability, the Monty Hall Problem only works if one accepts the comparison of two events of different magnitudes as logical. To dismiss people who cannot agree with this comparison as they do not get it is a problem if you ask me. I see the importance of highlighting both ways of thinking. In many contexts, comparing probabilities from events with different magnitudes or sample spaces is indeed problematic or even meaningless. For example, to provide another example of fundamentally different events: Comparing the probability of rolling a 6 on a die (1/6) with the probability of flipping heads on a coin (1/2) doesn't make much sense in isolation. In more complex scenarios, like comparing stock performance across different markets or time frames, not accounting for differences in magnitude can lead to serious misunderstandings. Additionally, people think if your initial choice was the car (which happens 1/3 of the time), then switching would be the wrong move. In this case, the host's reveal of a goat door doesn't help you at all. You've already won, and switching would make you lose. If one accepts the comparison of two events of different magnitudes, the Monty Hall strategy isn't about "always switch" but rather "switch because it's more likely you initially picked a goat." The host's reveal doesn't create new winning chances. The host doesn't change the fact that you probably (2/3 chance) guessed wrong at first.

Great explanation. Thanks for this 👏👏👏

Exactly, you go from picking 1/3 of the doors to 2/3 of the doors. It’s more about choices….

Lovely video Ben! As usual.

How could you say that theta is fixed when you integrate over it’s “different” values in 1:06

Thank you very much Ox, your class is extremely outstanding and great!

How dare I found this now.. Amazing works !

I am confused by p(a,b)= 1/3 x 1/2. Does this assume A and B are independent? If they are independent, p(a|b)=p(a); no need to go through all the rest of the derivation steps.

why did you write P(\theta) that way, isn't that the density function of \theta and not the actual probability ?

First day on Bayesian statistics. Lets go!

This was useful for me in understanding why with entropy and cross entropy with KL Divergence the Cross entropy will always be greater than the entropy (you have to flip the inequality though because the function in question is a -log which is concave

Can't believe I'm learning what people learned 9yrs ago and I'm surprised what are they doing now 😳

2:26

1:48

0:13 0:14 0:14

fantastic! absolutely fantastic explanation!

Would this also imply the reverse is true for a concave function? I.e., g(E(x)) > E[g(x)] ?

Stretching is dual to squeezing -- forces are dual. The Ricci tensor (positive curvature, matter) is dual to the Weyl tensor (negative curvature, vacuum). "Always two there are" -- Yoda. The Ricci tensor is the symmetric projection of the Riemann curvature tensor. The Weyl tensor is the anti-symmetric projection of the Riemann curvature tensor. Symmetry (Bosons) is dual to anti-symmetry (Fermions). Covariant is dual to contravariant -- dual basis.

Is an average football fan better educated (more sensitive to other cultures?), than average mathematician: ua-cam.com/video/3qqspUSxlJ0/v-deo.html

Yeah, except this is the probability of which door Monty opens, not the probability of you getting the car. The difference is that you counted only once on the either/or ⅓ side, but twice on the ⅔ side. The ⅔ side is also either/or, because the probability to get the car remains the same; Monty always opens the goat door. The chance for you to get the car remains the same with him opening either doors. So which is it, counting either/or door once, or twice? Either way it is ⅓ vs. ⅓ or ⅔ vs. ⅔ or 50/50. Guys, you’re wrong. You’ve made a mistake. Quit with your Reductio ad absurdum.You’ve allowed semantics to completely change what probability actually means. You’ve ignored the truth and continue to spread false propaganda. It’s not about debunking anything. Just get it right. 🤙

Assume you stay with your first pick. If your first pick is Goat A, you get Goat A. If your first pick is Goat B, you get Goat B. If your first pick is the car, you get the car. You only win 1 out of 3 games if you stay with your first pick. Switching means the opposite. It's just basic math/logic kids understand. Sadly, it's far too hard for idiots.

@@Araqius And here's cyber bully #1 spreading the wrong info. How much are they paying you to do this?! 1/3 of the time the car is behind Door A. 2/3 of THAT time the car is behind Doors B and C. Way to use the probability of where the car IS with the probability of where the car ISN'T to complete the equation. 👌 Also when Monty opens the goat door, you ignore, fail and REFUSE to re-attribute half of the probability of you getting the car back to you since you haven't done anything further than making your initial pick. Good one, man. Keep up the wrong work, dude. You and your drone are quite pathetic. 🤙

@@TristanSimondsen All you can do is bark? Not that i am surprised though, considering your parents. "Either way it is ⅓ vs. ⅓ or ⅔ vs. ⅔ or 50/50." Only a complete idiot like you or your parents would make a super stupid sentence like this. Imagine the host say "I am going to give you both door", what is your winning chance? Tristan, the idiot among idiots: The chance for my door VS the other door is 2/3 VS 2/3 so 2/3 + 2/3 = 2/3. Tristan, the idiot among idiots: This means my winning chance is 4/3.

@@TristanSimondsen Tristan, the idiot amonmg idiots: Either way it is ⅓ vs. ⅓ or ⅔ vs. ⅔ or 50/50. Tristan, the idiot amonmg idiots: The chance that my door is the car is 2/3 and the chance that the other door is also 2/3. Host: I am a good guy so I will give you both doors. Tristan, the idiot amonmg idiots: Now, the chance I get the car is 4/3. Tristan, the idiot amonmg idiots: I am a genius. Hoooraaay!!!

Where did the N choose X part go though?

Either I’m dumb as fuck or I’m just not paying attention like WTF if I wanted school I’ll go back for that lol more simple please

Likelihood is a probability, it can vary in the range [0 ; 1], it's a probability of "data", the sum of all those probabilities for each value of theta does not integrate to 1, but what's the point.

Dude, THIS is the kind of education worth paying for, not whatever my professors are doing

*I can prove the true nature of this 'problem'!* .. they trick you into thinking you have 'chosen' the first door. Think of it this way: the first round of the game is the fake 'choice' where you don't actually choose, you 'reserve', to protect one door and let one goat be eliminated from the game, 1st round is now done! Now they start the second game where the REAL choice is allowed, there are no longer any 'reserved' doors and the game parameters have now changed to allow 50/50 odds because you get to play this game from scratch with two doors and for the FIRST time get to actually 'choose' instead of 'reserve/protect', which is very different than the first game's round of choices..it's TWO different games NOT one, so if you do not account for this by creating two separate math problems then you are NOT accurately representing the true nature of the game, as you have to change the odds for all 'unknowns' every time you eliminate possibilities. This logic applies to different examples of this 'problem' as well. The key is understanding that the only real game is when the final choice is made, and that everything before that is just changing the parameters, you have to make the math adjustment for the new parameters as they change, it all comes down to the *state of the parameters* when you *actually choose* and NOT when you are simply 'negotiating' the parameter changes, ..thanks and you're welcome;)

Now test that theory in real life or in a simulation

Assume you stay with your first pick. If your first pick is Goat A, you get Goat A. If your first pick is Goat B, you get Goat B. If your first pick is the car, you get the car. You only win 1 out of 3 games if you stay with your first pick. Switching means the opposite. It's just basic math/logic kids understand. Sadly, it's far too hard for idiots.

@@Araqius exactly, the illusion comes in when you just look at what is actually in front of you at the time and disregard the past process. In either case you are looking at, what appears to be a binary equation with the statement 'it HAS to be inside one or the other' being 100% true so some people assume: "how could two identical scenarios have different properties? it has to be 50/50 no matter what!" I like to say:"think of splitting a shuffled Deck of cards into two piles, but then you remove half the Spades from one pile and balance the amount out using just Diamonds from the other half. One pile has a lot more red cards in it, so it is more likely one pile will give you a red card and the other will give you a black card, even though there are only two piles" eliminating the door in the game is what 'takes the spades away', so lol, yeah, everyone is trying their best to describe being right so i took the other-side and did my best at describing the logic 'in being wrong'..did i win? (P.S. people who actually don't get this should never gamble)

Each sheep is also an independent and unique individual. This game cannot mislead players by treating every sheep as the same thing. There are three options, two of which also stand on their own. If the player can tell them apart, there is no chance of winning like 2/3

Is the reality of quantum mechanics more logical than what the math? The player never knows which goat is the one revealed.。.. If life is an endless choice, then if we face it with this way of dealing with things, then what aspect of human nature is eternal? Some people always want to control the world and act as the so-called "God"?

The sound is just awful. Seems like the presenter's microphone is 50 feet away from the presenter.

Maybe thinking this way....... Try to understand it from the perspective of the host. The player provides his prediction to the host. The host opens a pair of doors with sheep, leaving only two pairs of doors, one sheep and one car, for the player to choose. If the player predicts the door of the car, what will the host do? of couse Want the player to change their choice. The host asks you to calculate the odds with misleading you already have once choice before. you swapping your choice, 2/3 will win. On the contrary, the player predicted the door with the sheep. What will the host do to make the player still choose the door with the sheep? you can tell. ........ Dawyer's door problem, calculate the chance of the host winning.

I come back to this video so many times now really good thank you!!

Thank you! Great explanation

I have question about the result here. We find that the posterior distribution is N(thetaPrime, sigmaPrime^2) and you have given the formulas for thetaPrime and sigmaPrime^2. Now, the posterior distribution is, of course, the distribution we get when we combine the data from our observations together with our prior, so I'd expect that thetaPrime and sigmaPrime would contain the information we gained from our observations. Indeed, thetaPrime depends on the number of observations N as well as the mean of the observed values, xBar. However, sigmaPrime appears to only depend upon the number of observations N, and it seems entirely agnostic as to what the values of the observed xi's are. That is, no matter how wildly different the observed values of xi are from thetaNot (the presumed, uninformed mean prior to applying any data), the variance of the posterior distribution will be completely unaffected by the observations. As a more concrete example, suppose the measured IQs were scattered from 50 to 210 instead of being clustered in the 50s-80s. I would think sigmaPrime would need to grow to reflect the enormous variation in the observed values, but the formula you've derived implies that it does not care what the values are. Is this a consequence of your assumption that sigmaX is a "constant"? Is the result still valid?

I think we are not adding the lengths but areas of slices in the integral. For the length you'd need to integrate over square root of H' + W'

Wow,you explained it really well

Ily

What if we want the prior to carry more weight? I realise that we could do this by making it more extreme and concentrated, but what if we don't want to do that. For example, what if I have seen something happen a million times previously but never recorded the data, but I trust my memory and have a strong handle on what I believe distribution of the parameter should be. Then we receive 1000 new observations (a high number, but nowhere near the million unrecorded), we might not want the data to dominate in such a circumstance

Your memory on seeing it happen a million times before is what influences the prior to be so extreme. If you made the prior less extreme that would be contradicting your memory.

Best to think of the prior as an 'informed guess' based on your knowledge of the system. The strength of the prior function will depend on how well you know the system. In your case of seeing it a million times your informed guess should be pretty accurate, therefore making the prior more extreme. On the other hand if you knew nothing about the system your prior would be a flat beta(1,1) distrubution and the posterior would only be influenced by the measurements you make after your 1000 new observations.

@@shokker2445 i may be missing something, but what if although seeing it happen a million times, it was quite variable, such that I had a really good handle not just on the location, but the uncertainty around it. Would this need to be Weighted in some way so that it isn't the dominated by the new 1000 cases? Let's say they are all found in one tail of the original prior

Well the prior would be weighted very strongly since you have a good understand of the system. It doesn’t matter whether it is a binomial distribution (coin toss for example) or a completely flat beta(1,1) distribution (such as a random number generator). The weighting of the prior is determined by how well you know the system. After a million previous measurements you should have a good idea and therefore it will be weighted much more strongly than the 1000 measurements. Those 1000 measurements could alter the system slightly to get the posterior, and you could then use that posterior as a new prior for another 1000 measurements. This cycle can be repeated as many times as you like.

Great video here! Thanks!

Very clear explanation, thank you!

This is brilliant, thx

Thanks! The concepts and the derivation were clearly explained.

know variance, but to you know the mean?

People who say math isn't useful later in life. I'm a data scientist, this stuff is still very relevant. Also 90% of your job probably have some data product tied to it, all this is used in there as well.

There could be a population of England France games if you believe in the many worlds theory, which many do

Thank you for your time and effort.

Thank you for your time and effort.

DONT DO UNUSEFUL VIDS PLEASE!!!!!!

Just because they are USELESS to you does not mean they are useless to other people. He did a fine job of explaining this to people that did not already know what it means. So either you already know the subject, which means the video is not for you, or you did not catch the subject (not everyone will…. And some takes time)

every single lecture/description in stats i come across always describes x1,x2,x3...are random variables rather than just stop using that terminology and just calling them instances of 1 random variable. : once x1 ,x2 are there they are not random variables , there is only 1 random variable here isnt that the case

this was a good video but would be nice to emphasize where a bayesian approach would be practically useful as opposed to the classic approach : in a nut shell Why do this?

this particular example if formulated in a very confusing way and has errors in the audio vs what is on the equations